Here are some pictures about the history of energy. The subject is historically very interesting, as the concepts of energy and entropy, which are also related to physics, were clarified surprisingly late and with some difficulty. The preface to the textbook of Lewis and Randall puts the scientific process quite poetically:

| “There are ancient cathedrals which, apart from their consecrated purpose, inspire solemnity and awe. Even the curious visitor speaks of serious things, with hushed voice, and as each whisper reverberates through the vaulted nave, the returning echo seems to bear a message of mystery. The labor of generations of architects and artisans has been forgotten, the scaffolding erected for their toil has long since been removed, their mistakes have been erased, or have become hidden by the dust of centuries. Seeing only the perfection of the completed Whole, we are impressed as by some superhuman agency. But sometimes we enter such an edifice that is still partly under construction; then the sound of hammers, the reek of tobacco, the trivial jests bandied from workman to workman, enable us to realize that these great structures are but the result of giving to ordinary human effort a direction and a purpose. Science has its cathedrals, built by the efforts of a few architects and of many workers. In these loftier monuments of scientific thought a tradition has arisen whereby the friendly usages of colloquial speech give way to a certain severity and formality. While this may sometimes promote precise thinking, it more often results in the intimidation of the neophyte.” |

[March 26 update: apropos “construction”. The story of entropy is definitely still under construction. It is also interesting. Entropy even has a cameo in a recent Hollywood movie: a clip from the movie Arrival. More should be said about entropy and its history, but unlike with energy, the confusions are still present today, especially in the context of the “entropy of the universe”, where Planck already pointed out that applying the notion is problematic. The universe is by definition everything we can access. It is not in a heat bath of a larger system. Still, for a finite subsystem like a black hole, one can define entropy. One notion is the Bekenstein-Hawking entropy, a quantity proportional to the area of the event horizon of the black hole. The name entropy is then just chosen by analogy, in order to investigate whether information can disappear in a black hole, violating the second law of thermodynamics. (What is meant by S0, the ordinary entropy outside the black hole, is less clear.) There is a mathematical explanation of why the surface area is a natural notion: if we take the uniform, rotationally symmetric probability measure on the sphere of radius r, then its entropy is the logarithm of the surface area. Now if we look at the rate of change, then this is

$$ \frac{d}{dt} \log A(t) = \frac{A'(t)}{A(t)} $$

which is close enough to make sense, especially since the entropy S0 of the rest of the universe is not well defined anyway. One could imagine having just a finite set of black holes, with the total entropy being the sum of the entropies of the holes plus the entropy of some hypothetical “heat bath” obtained by radiation. But how would that make sense for two black holes which have never had the opportunity to exchange radiation until now?

Another way to make mathematical sense of entropy for the universe is to assume that the geometry is a finite geometry with some internal energy. This is not the point of view of most physicists, even though loop quantum gravity comes close. What is probably meant is a thermodynamic model in which the language of statistical mechanics, developed for complicated systems like gases, is extended to the motion of celestial bodies subject to gravity. One can then understand the geometric part as being in the “heat bath” of the processes not under consideration (processes given by the electromagnetic, weak and strong forces, which are overlooked when looking at gravity). There are problems of course, because the universe is so large that different parts do not interact, which makes a thermodynamic picture very questionable. Additionally, the gravitational potential is unbounded, so that mathematically one could get infinite energy by having two bodies collide. This is not a problem for a physicist, who would truncate the Newton potential once the bodies are so close that other forces become more relevant. But it is a problem for a mathematician: even the task of justifying the thermodynamic assumption in a Boltzmann gas (which assumes some stochastic collision rules) is tremendous (the mathematical difficulties are illustrated e.g. by the Lanford theorem or by one of Simon’s 15 unsolved problems, asking whether the n-body problem has global solutions for almost all initial conditions.
But a physicist is rarely interested in such existence results, especially since in the second problem one only has to investigate non-collision singularities, which do not exist in relativistic mechanics). A very radical approach, breaking with all known physics, is to look at finite systems. For a finite system, one needs to know the finite probability distribution on the finite complex. One could then assume that it is an equilibrium measure for the Helmholtz free energy, as the equilibrium condition dF=dH-TdS=0 suggests. But we have seen in the Helmholtz article that for a very simple model, already if the universe is a triangle (!), the free energy is a discontinuous function of the temperature. The story is therefore complex already for very small universes.

But let's go back to notions of entropy in which the universe is not just a finite set. As a mathematician, one has to point out that the functional S on probability measures is not such a nice functional. It is not at all continuous, even if we take the weak-* topology on measures. If one looks at measures other than absolutely continuous measures or finite point measures, one has to say exactly in which sense one understands entropy. It is trivial to see that the entropy can not be continuous: on finite point measures the entropy is always larger than or equal to zero, but on absolutely continuous measures it can be negative. The functional is also unbounded, even if one looks at measures on a compact space, where the space of probability measures is a compact set. On a finite interval [a,b], the maximum is attained by the uniform distribution and is

$$ \log(b-a) $$

which is negative if b-a < 1. So, to be on safe ground, one has to assume that the measures under consideration are either nice absolutely continuous measures or finite discrete measures. For a mathematician this is vexing, as a Baire generic measure is singular continuous. So, for most measures, neither the notion of Shannon entropy nor the notion of differential entropy works.
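The contrast between the two notions can be checked numerically. The following is a minimal Python sketch, not part of the original discussion: the function names are made up for illustration, and it assumes the usual conventions, namely Shannon entropy -Σ p log p for finite measures and differential entropy -∫ f log f for densities, both with the natural logarithm.

```python
import math

def shannon_entropy(p):
    """Shannon entropy -sum(p_i * log(p_i)) of a finite probability vector p."""
    return -sum(x * math.log(x) for x in p if x > 0)

def uniform_differential_entropy(a, b):
    """Differential entropy of the uniform density f = 1/(b-a) on [a, b].

    Since -integral f log f = log(b-a), no numerical integration is needed.
    """
    return math.log(b - a)

def discretized_uniform_entropy(n):
    """Shannon entropy of the uniform distribution on n equal cells (equals log n)."""
    return shannon_entropy([1.0 / n] * n)

if __name__ == "__main__":
    # Shannon entropy of a finite measure is never negative.
    print(shannon_entropy([0.5, 0.25, 0.25]))
    # Differential entropy of the uniform density is negative on short intervals.
    for a, b in [(0.0, 2.0), (0.0, 1.0), (0.0, 0.5)]:
        print((a, b), uniform_differential_entropy(a, b))
    # Discretizing an interval into n cells gives Shannon entropy log(n), which
    # grows without bound instead of converging to the differential entropy.
    for n in (10, 100, 1000):
        print(n, discretized_uniform_entropy(n))
```

The last loop illustrates why one has to say which notion is meant: finite approximations of the uniform measure have Shannon entropy log(n), which diverges, while the differential entropy of the limit is the finite number log(b-a).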

April 10, 2017: A blog entry by Sabine Hossenfelder on why there is interest in the information problem for black holes.]

Some History highlights

|

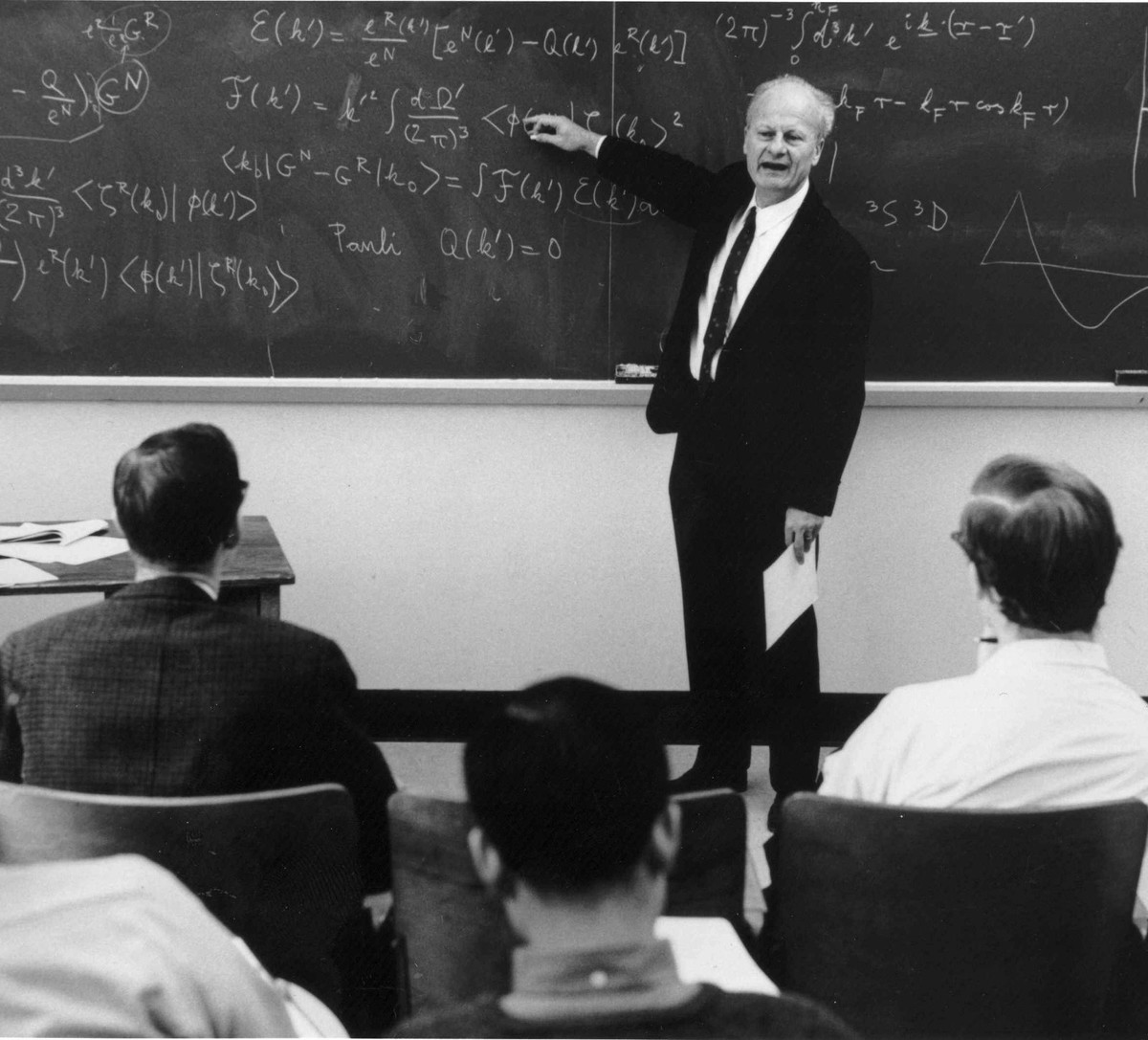

The history section of this wiki article tells part of the story of the concept of thermodynamic free energy. Here is a summary, with some illustrations and additions, of some of the main discoveries in the area of energy.

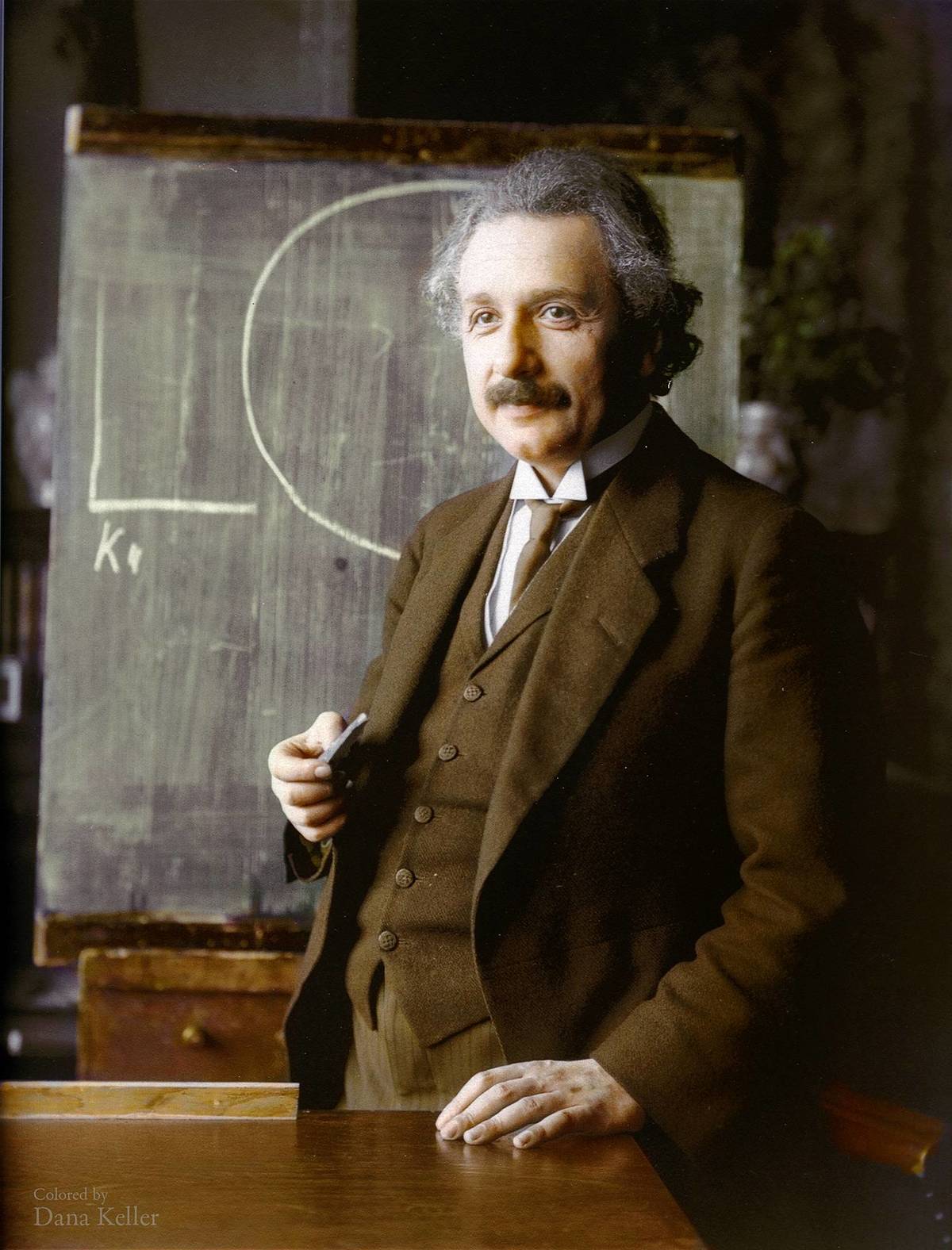

By the way, the NOVA show “Einstein’s Big Idea” also gives a good, well-told popular timeline: |

The textbook of Lewis and Randall

The textbook of Gilbert Lewis and Merle Randall, from 1923, was the main reason for replacing the term “affinity” with “free energy”. Quote:

“Thus we know that whenever a condition of equilibrium is reached in a chemical reaction, the free energy change of the reaction is zero. (…) In the early days of the first law of thermodynamics, before the second law was fully understood, it was assumed as a matter of course that the most efficient utilization of a chemical reaction for the production of work would consist in converting all the heat of that reaction into work. In other words, the quantity dH was assumed to represent the limiting quantity of work which could be obtained under the conditions of maximum efficiency. In some quarters this idea has persisted to the present day. However, we have just seen that it is not dH but dF which measures the maximum capacity for performing useful work. And these two quantities are not equal unless the entropy of the system in question is the same at the beginning and end of the isothermal reaction under consideration. This is shown by applying F=H-TS to an isothermal process which gives dF-dH=-TdS. According to the sign of dS, the work obtainable in a given reversible process may be greater or less than the heat of the reaction. It is true that, according to the first law, the external work performed must be equal to the loss of heat content of the system, unless some heat is given to or taken from the surroundings, but this is precisely the point first clearly seen by Willard Gibbs. When an isothermal reaction runs reversibly, TdS is the heat absorbed from the surroundings and if this is positive the work done will be even greater than the heat of the reaction.”
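The step in the quote from F=H-TS to dF-dH=-TdS can be spelled out as follows. This is only a sketch in the notation of the quote, assuming that the temperature T is held constant (dT=0), which is what “isothermal” means:

$$
\begin{aligned}
F &= H - TS \\
dF &= dH - T\,dS - S\,dT \\
dF - dH &= -T\,dS \qquad (dT = 0)
\end{aligned}
$$

Reading -dF as the maximal work obtainable and -dH as the heat of the reaction, this says -dF = -dH + TdS. For dS > 0, the work therefore exceeds the heat of the reaction by TdS, the heat absorbed from the surroundings, as the quote states.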