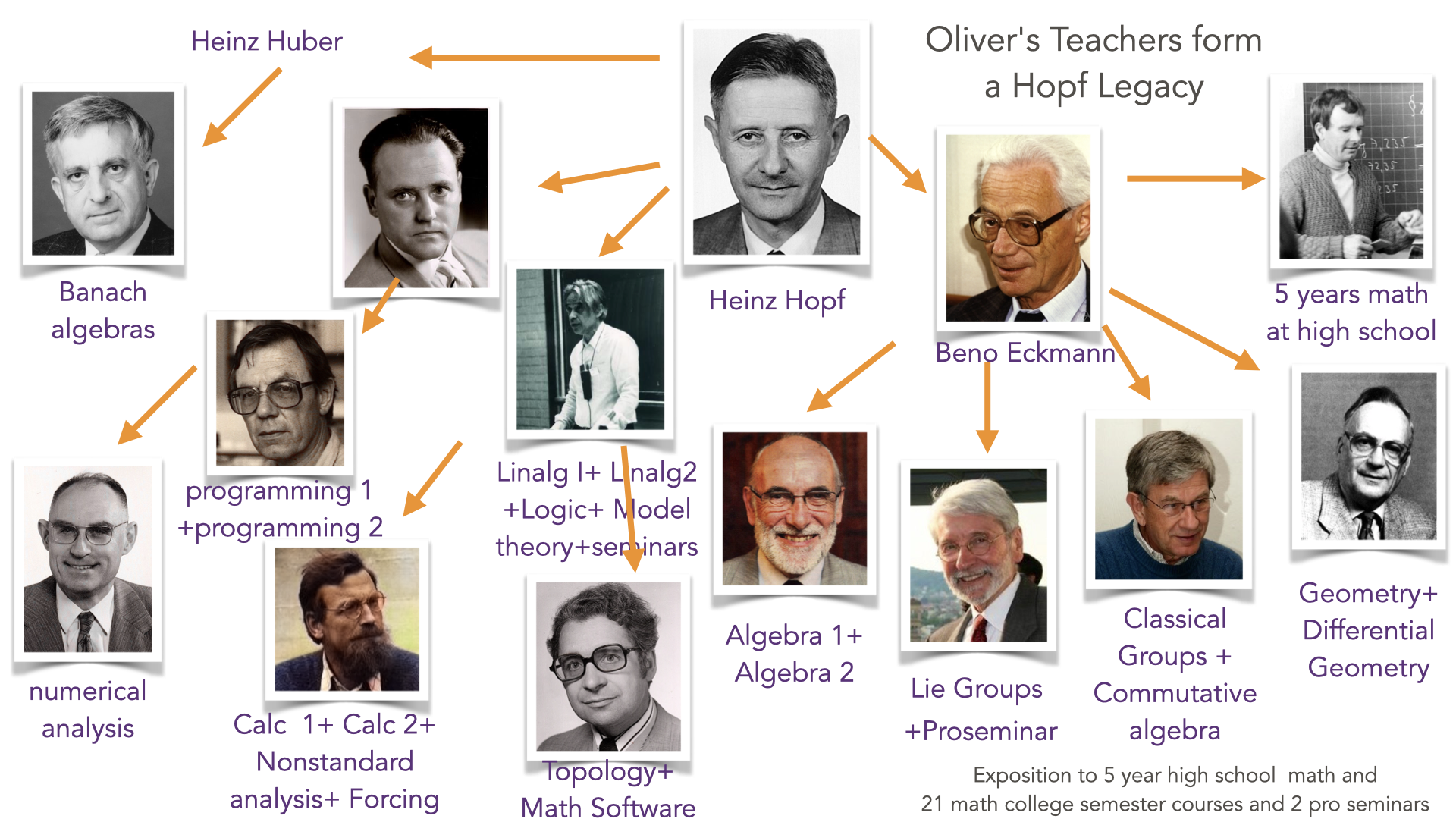

When I studied mathematics at ETHZ, taking two semesters of calculus and, at the same time, two semesters of linear algebra was mandatory. My Calc 1 (1a/1b here) and Calc 2 (21a here) teacher in the first year was Hans Laeuchli. His advisor Ernst Specker was, during the same time, my linear algebra teacher for two semesters. Both are on the genealogy line of Heinz Hopf, since Specker is a “kid” of Hopf and Laeuchli is therefore a “grand kid” of Hopf. By the way, my high school teacher Roland Staerk (who taught us math for 5 years at the Kanti Schaffhausen) was also a grand kid of Hopf, because Staerk was a “kid” of Beno Eckmann. Many of my teachers were kids of Hopf: Urs Stammbach (algebra 1 and 2) (math 122, 123 here), Max-Albert Knus (commutative algebra), Guido Mislin (Lie groups), Erwin Engeler (mathematical software and computation), or Peter Henrici (numerical analysis), a student of Eduard Stiefel, who is a student of Hopf. Then there was Max Jeger (both geometry and differential geometry), who was also a student of Stiefel and Eckmann and so a descendant of Hopf. And then Peter Laeuchli, who was our computer science prof for two semesters (the course was then called numerics even though it was a pure CS course, I think tougher than CS 50: we had to program in Pascal in written exams, on paper with a pen, completely closed book. You had to know and speak the language by heart!). Unfortunately, I never met Stiefel, who died relatively young.

I took two other courses with Laeuchli: Axiomatics (especially Goedel stuff) and Nonstandard analysis. Laeuchli used Nelson’s approach (internal set theory, IST) and started his course with a section “Hokus Pokus”. My notes are here [PDF]. I was very impressed by non-standard analysis and once even (in a Specker seminar) presented a proof of Furstenberg’s recurrence theorem in the language of non-standard analysis. I once promoted non-standard analysis in the context of dynamical systems and analysis to Moser (my undergraduate advisor) and showed him a nonstandard proof of the Stone-Weierstrass theorem. He cautioned me that this might not be a good thing to follow. He was right. The language of non-standard analysis is still not widely used today. If you speak a language which nobody else speaks, nobody listens either. I still found it useful to “think like a non-standard analysis person” but just not “talk like one”. It is magic. And Laeuchli called it “Hokus Pokus” for the right reason. For example, the extreme value theorem for continuous functions on a compact set is almost trivial, as there is a finite set of points such that every point of the compact set is infinitesimally close to one of them. Just evaluate the function on these finitely many points and take the maximum. Now take the standard part of the point where this maximum is attained and you have a point where f takes its maximum. IST is based on an axiomatically sound extension of ZFC, which is ZFC+IST. It is a language extension, and this language is more powerful but equivalent to classical mathematics.
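The extreme value argument just described can be spelled out in a few lines. What follows is only a sketch under assumptions that are not in the text above: the compact set is taken to be the interval [0,1], the finite set is the uniform grid with an unlimited number N of steps, and the symbols F, x_k, x^*, x_0 are names chosen here for illustration. The relation ≈ means "infinitesimally close" and st denotes the standard part.

```latex
\begin{align*}
&\text{Setup: } f:[0,1]\to\mathbb{R} \text{ standard and continuous},\quad
  N \text{ an unlimited integer},\quad
  F=\{\, x_k = k/N : 0\le k\le N \,\}. \\
&\text{(1) Every } x\in[0,1] \text{ has some } x_k\in F \text{ with } |x-x_k|\le 1/N,
  \text{ hence } x_k\approx x. \\
&\text{(2) } F \text{ is finite, so there is } x^*\in F \text{ with }
  f(x^*)=\max_{0\le k\le N} f(x_k). \\
&\text{(3) Put } x_0=\operatorname{st}(x^*)\in[0,1].
  \text{ Continuity at the standard point } x_0 \text{ gives } f(x^*)\approx f(x_0). \\
&\text{(4) For standard } x\in[0,1] \text{ pick } x_k\approx x:\quad
  f(x)\approx f(x_k)\le f(x^*)\approx f(x_0). \\
&\text{(5) Since } f(x) \text{ and } f(x_0) \text{ are both standard, } f(x)\le f(x_0);
  \text{ by transfer this holds for all } x\in[0,1].
\end{align*}
```

Step (3) uses compactness (every point of [0,1] has a standard part in [0,1]), and steps (3) and (4) use the nonstandard characterization of continuity of a standard function at a standard point.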

Laeuchli not only taught me facts, he also taught “taste” and “insight”. This is the real value of higher education. In 2015, I described an episode about Specker and Laeuchli testing me in logic in this document (on page 41 of that PDF). Hans Laeuchli, my calculus teacher, not only could do magic (Hokus Pokus) but also provided concrete gems like the following one:

Side remark (written while feeling a bit depressed, especially about the prospects for creativity). Let's first get back to Laeuchli: the Laeuchli episode reminded me that teaching “taste” and “insight” is key. This is the real value of higher education. Especially in a time of AI, knowledge itself appears to have become cheap. But there is more to knowledge than just facts. There is also selection and emphasis and judgement. Let's rant a bit about higher education in general: higher education is under heavy attack in our modern time. Much of the critique is self-inflicted. If colleges start to put ideologies before truth, then they risk being defunded. Education should not be part of politics or religion or ideology. Everybody with a little bit of self-awareness knows that our educational institutions have also been infected by ideological activism (some of which I myself even support). Fortunately, there has been a trend recently to reverse this, and that is a good thing. A good opinion piece by Steven Pinker appeared last spring. Pinker then also talked much about this in public, like here. Schools should stay neutral in all political, religious or ideological spheres. Of course, each member should be able to express opinions. The principle of free speech is important. But the institution itself should remain politically and ideologically neutral. Not only universities, but also the news media have become extremely partisan and political, to the point that we simply can no longer trust them.

In addition to the danger of appearing to become ideological temples and getting defunded, technology has in the last 25 years nibbled away more and more of what had been done at the schools themselves. In 2000, when I started here, almost everything was still made in-house. Much of this structure is gone. I once made a slide illustrating this: it shows that there were times in 2021 when we used 18 companies for teaching: https://people.math.harvard.edu/~knill/warmup/technology.html . Not that this is all bad. It was probably necessary, as the world has become more complicated and much more fragile. (In 2005, I could still put a webcam freely on the web and access my computer freely from outside; there were scripts that blocked IP addresses that tried to come in, and already 20 years ago I had the machine behind a firewall.) Today it would be impossible.

That was the attack of the “tech companies” on higher education. The next wave has come. It is now in the form of AI. Education as a whole is under attack (all of it: teaching, research and administration). We live in a time where students can buy pens which scan a text, connect to ChatGPT and get an answer. Soon it will be built into glasses, then into the brain. It is a brave new world. It is even a brutal new world for humans. The warnings have been underestimated by far. It has become much more dangerous, much faster than we anticipated (even faster than Kurzweil anticipated, whom everybody laughed at when he first talked about the “singularity”; his prediction has come true earlier than he predicted). But let's get back to Laeuchli: while the math he taught us during those years is pretty standard, it was more than that. Teachers can provide insight and, more importantly, also taste. One can ask: what’s the point of learning all this if I can look it up? This is a hard question. It is tempting to fall into nihilism. I myself sometimes get depressed about the prospects: we used to aim for a world where we can be creative and have fun learning and get assistants to do all the things we do not like to do, and what happens is that the creative part, the writing, the thinking, is taken over by machines first, while we still have to wipe our own bottoms! That might be the only thing which makes us humans special (until machines actually start to eat and anti-eat and so will need to wipe their bottoms). I just wanted to see this drawn by AI with the prompt “show a bot wipe its bottom”, and the answer was “Sorry! Our AI moderator thinks this prompt is probably against our community standards.” No skill was involved in drawing this picture, of course. AI use is too cheap. (*) It is really sad. I myself gain satisfaction by writing and thinking myself, without a machine telling me what to think or write. It's the path that counts, not the destination. We want to be, not to have, to say it with Erich Fromm.

While I think I was one of the earliest proponents of AI, at least here (we got a grant in 2003-2004 from the Harvard provost to build AI bots on a server), I now think it might be time to just “say no to generative AI”. It makes us dependent, it makes us stupid. It demotivates. It turns us into children who can do only what “mommy” allows us to do. It steals content from creative minds without giving credit. It demotivates building content. It is too easy to use. A monkey can turn in a PDF and submit the answer. A monkey can hold a ChatGPT pen over a text and get the problem solved. (*) The user does not even have to be able to read. There is no skill at all involved in using AI. Zero. There is this stupid ad “disappear for 3 weeks and learn AI and become dangerous”. I would say: “Yes, disappear and use AI, and remain trapped there in a technology which not only replaces you, but also makes you weak and powerless and dependent. It's more like: disappear and vanish completely”. What you learn by using AI is the first thing which AI can do better. AI forces us to become infantile idiots because it pretends to do the thinking for us, and it actually does it pretty well already. We walked past the Turing test 1 year ago (one can see this in math olympiad contests: as in chess, the machines are already better than humans, also at solving tricky math problems). Chess computers actually killed my own interest in chess. Soon, the machines will be doing math research better than us. And nothing prevents a company from building 10’000 super strong math researchers of Fields Medal strength, once they are able to build one! And I want to see the human who can keep up with the productivity of 10’000 super strong artificial mathematicians.
At first, of course, universities will keep humans in the loop (just to pretend to have some empathy), but if it becomes cheaper and more productive to just pay for some super expensive LLMs written exclusively to do research or teaching or administration, then the time for humans is over. Well, this looks pretty bleak. That’s why I got interested in plumbing already 6 years ago (see my plumber stories and my most recent bathroom project at home). It looks more and more as if plumbing tasks will be outsourced to mighty machines much later than the job of finding and proving new theorems!

(*) This reminds me of the wonderful movie A Fish Called Wanda, where Otto says: “Apes do not read philosophy” and Wanda answers “yes they do Otto. They just don’t understand it”. This pretty much hits the nail on the head also for the use of AI. Apes can use AI and solve problems. They just don’t understand it. The problem is much more severe than in other areas where we have lost control. When using a device like the iPhone, we do not have to understand the workings of its interior. When driving a car, we do not need to understand its technology. But at least these devices and objects were created by humans. They were assisted by machines, yes, but it must have been fun for the engineers. But if the design itself is also done by machines, if the assembly is done by machines, if the companies building it are run by machines, will humans still get the wages to buy them? We know from economics that it is all about value. And it looks as if the value of humans is in decline.

As for now, my personal “activism” is to dislike on social networks anything that looks AI generated. (*) I don’t read papers that are AI generated. I don’t referee papers that appear AI generated. I don’t listen to music that appears to be AI generated. There might come a time when we can no longer distinguish between AI generated stuff and human generated stuff; we might then have to ask authors or researchers to create live. It is a bit like in rock climbing: you can always claim to have climbed a route, and so uncut video footage is needed. There is still hope that we will start to understand what makes us human. As for me, I try to stay independent. If the internet with all its servers went down tomorrow, I could continue to do my work. I have never become dependent on any of the “cloud” stuff. I keep my photos, music files and video files all local. If Google disappeared tomorrow, if AI disappeared: no problem. I still have my library of both real and electronic books, the music I like stored physically on my own hard drive as MP3, the movies I like stored physically as MP4. I can continue to write. I can continue to work on paper. If electricity went down, even if solar panels stopped working and all technology disappeared, I could still remain productive with paper and pen with almost no penalty; maybe I would be even more productive. Maybe it will be like in Spielberg's good old movie AI from 2001: there could be a rebellion against machines. That movie tried hard to make the viewer root for the main characters, which were all bots, but the general public rebelled and arranged games in which the bots would be tortured. AI was a disturbing movie already at a time when no real AI was in sight. The reality might be even more disturbing. But who knows. Nobody was ever able to predict the future. It might all turn out completely different.

(*) In 2022, when ChatGPT appeared, I tried to influence it by giving misleading feedback, hoping it would get more stupid. ChatGPT was relatively stupid back then already, claiming for example that a “vector is something with magnitude and direction”, but it did not help. The models have become more and more powerful. Today, one can find the stupid definition of vectors only in textbooks and taught in schools. But already back in early 2023, one could argue with the AI and ask “but does the 0 vector have a direction?”, which already then made the AI click.