Inconsistencies?

Image Source: part of Epimenides text here.

Mathematicians have worried about inconsistencies for thousands of years. One of the first documented examples is the Epimenides paradox. Epimenides, a Cretan poet and philosopher, famously made the statement “All Cretans are liars” around 600 BC. It is usually presented as an example of a statement which is neither true nor false (which is surprising at first, as we know that something is either true or false: “tertium non datur”). This self-reference looks today like an amusing word game or joke and certainly is entertaining. But as explained well in the popular book Gödel, Escher, Bach by Douglas Hofstadter, it is at the heart of the foundations of mathematics. (Gödel essentially constructs a statement which says “this statement cannot be proven within the system”, using the self-reference of the Epimenides joke.) As we know now, if a strong enough mathematical axiom system is consistent, then it is impossible to prove its consistency within the system itself. (If the system were inconsistent, we could of course prove anything, including its consistency … but then we would be in a deep puddle of mud.)
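The difference between the Epimenides sentence and the stricter liar sentence (“this sentence is false”) can be checked by brute force. The Python sketch below rests on a simplifying assumption: a “liar” is someone all of whose statements are false, and a truth-teller only says true things. The function names are made up for illustration. It enumerates all truth-value assignments and keeps only the consistent ones.

```python
from itertools import product


def consistent_liar_values():
    """Truth values v for the sentence L = 'L is false'.
    A value is consistent if L's truth value equals the truth of what L asserts."""
    return [v for v in (True, False) if v == (not v)]


def consistent_cretan_worlds(n):
    """Consistent worlds with n Cretans; Cretan 0 is Epimenides, who says
    'All Cretans are liars'. Assumption: liars only say false things,
    truth-tellers only say true things. A world is a tuple of liar flags."""
    worlds = []
    for liars in product((True, False), repeat=n):
        statement = all(liars)            # truth value of 'All Cretans are liars'
        if statement == (not liars[0]):   # his claim is true iff he is truthful
            worlds.append(liars)
    return worlds


print(consistent_liar_values())
print(consistent_cretan_worlds(1))
print(consistent_cretan_worlds(2))
```

The liar sentence admits no consistent truth value at all. With Epimenides as the only Cretan the same happens, but as soon as there is a second Cretan, the statement can consistently be false: Epimenides is a liar and some other Cretan is truthful.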

Image source: crop from Goedel and Einstein picture.

A metaphor

To put it in a metaphor: we can never know whether there is a killer theorem “1=0” provable within a strong enough system which destroys the axiomatic setup. Most of us (myself included) are not concerned about this, just as we are not concerned about a killer asteroid hitting the Earth and annihilating life. In just 4 days, on the 19th of April 2017, a rather large asteroid will pass 1.1 million miles from Earth. The asteroid 2014 JO25 is 650 meters wide. This is still small but would be terrible if it hit. The “dino killer” was between 5 and 15 km wide. Fear of an asteroid would be irrational, as the probabilities of other hazards or risks are much higher. Similarly, in mathematics, the danger that a theorem is wrong but accepted by the community is much larger than the danger of an inconsistency, especially at a time when papers are judged by who wrote them and not by their content. Many proofs are so complex that nobody has read and understood them completely. There are many theorems which are so complex that they are never taught and proven in class.

[Example: in ergodic theory one knows the beautiful theorem of Pesin. The proof is difficult to understand, and others must feel the same way: as of today, I’m not aware that any proof has been written down which is comprehensible and teachable. (The theorem tells that for a volume-preserving diffeomorphism T of a compact manifold, the non-vanishing of all Lyapunov exponents on a set A of positive measure implies that the dynamics of T restricted to A is measure-theoretically conjugate to a Markov chain.) Even a book on the subject by a grandmaster does not prove the theorem. The danger that the Pesin proof is wrong is very small, but the possibility that there is a gap is orders of magnitude larger than the danger that an inconsistency of ZFC will develop. (P.S. There is a monograph of Katok and Strelcyn on an extension of Pesin theory to maps with singularities, which indicates that the theory has been reviewed and verified enough to be accepted now; see this literature list from my undergraduate thesis.) Still, Pesin theory has not passed the teachability and expository test yet.]
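Lyapunov exponents measure the average exponential rate at which nearby orbits separate. The Python sketch below (helper names are illustrative) estimates them for Arnold's cat map T(x, y) = (2x + y, x + y) mod 1, a standard area-preserving example on the 2-torus. It is uniformly hyperbolic, so it is far simpler than the nonuniform setting of Pesin theory; it only shows what nonvanishing exponents look like. Because the map preserves area, the two exponents must sum to zero; here they are ±log((3 + √5)/2) ≈ ±0.9624.

```python
import math


def cat_map(x, y):
    """Arnold's cat map on the unit torus; its Jacobian has determinant 1."""
    return (2 * x + y) % 1.0, (x + y) % 1.0


def cat_jacobian(x, y):
    """Derivative of the cat map; constant for this linear example."""
    return ((2.0, 1.0), (1.0, 1.0))


def lyapunov_exponents(step, jacobian, x, y, n=1000):
    """Estimate both Lyapunov exponents of a 2D map along the orbit of (x, y).
    The top exponent is the average log growth of a renormalized tangent vector;
    the sum of both exponents is the average of log|det J|."""
    v = (1.0, 0.0)
    top = 0.0
    log_det = 0.0
    for _ in range(n):
        (a, b), (c, d) = jacobian(x, y)
        v = (a * v[0] + b * v[1], c * v[0] + d * v[1])   # push the tangent vector forward
        norm = math.hypot(*v)
        top += math.log(norm)
        v = (v[0] / norm, v[1] / norm)                  # renormalize to avoid overflow
        log_det += math.log(abs(a * d - b * c))
        x, y = step(x, y)
    top /= n
    return top, log_det / n - top


lam1, lam2 = lyapunov_exponents(cat_map, cat_jacobian, 0.1, 0.2)
print(lam1, lam2, math.log((3 + math.sqrt(5)) / 2))
```

The estimate of the top exponent converges like 1/n, because the starting vector is not exactly aligned with the expanding direction.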

Image Source: part from this picture.

Keeping watch

While few mathematicians worry about the inconsistency problem, it can be wise to be aware of the danger and to prepare for eventualities, just as NASA maintains a center for near-Earth object studies. Being aware allows us to build safeguards in case the catastrophe should happen. What about the inconsistency problem in mathematics? Some mathematicians have thought about it. Edward Nelson, for example (an outstanding mathematician who wrote many good books on probability theory, nonstandard analysis, stochastic processes, arithmetic and mathematical physics; see his website), worked in the last part of his life on the inconsistency of arithmetic and at one point claimed to have found an inconsistency. Nelson was no crackpot. Indeed, if you look at the books and papers he wrote, he must be considered one of the clearest minds in the mathematics of his time. There is some discussion here on a blog of John Baez. Nelson retracted his claim. The consensus today is that the “Nelson asteroid” has passed us safely and that Peano arithmetic P is considered safe. But as we know (and this is a theorem of Gödel), we cannot prove that we will always be safe. Yes, we can possibly build a larger system in which the consistency can be proven, but then this larger system is not proven to be safe. So, is there a safe bunker which will protect us from annihilation?

Image Source: part of Bunker near Opicine.

[April 20, 2017: In 2006, Nelson wrote a controversial essay “Warning Signs of a Possible Collapse of Contemporary Mathematics” in which he deals with the concept of infinity in mathematics. Even a finitist cannot avoid infinity. From the first page of this article [PDF]:

Is infinity real? For example, are there infinitely many numbers? Yes indeed. When my granddaughter was a preschooler she asked for a problem to solve. I gave her two seventeen-digit numbers, chosen arbitrarily except that no carrying would be involved in finding the sum. When she summed the two numbers correctly she was overjoyed to hear that she had solved a mathematical problem that no one had ever solved before.

From page 3: But how do we know that ZFC is a consistent theory, free of contradictions? The short answer is that we don’t; it is a matter of faith (or of skepticism). I took an informal poll among some students of foundations and by and large the going odds on the consistency of ZFC were only 100 to 1, a far cry from the certainty popularly attributed to mathematical knowledge.

From page 5: Notice that no concept of actual infinity occurs in P, or even in ZFC for that matter. The infinite Figure 1 is no part of the theory. This is not surprising, since actual infinity plays no part in mathematical practice (because mathematics is a human activity carried out in the world of daily life). Mathematicians of widely divergent views on the foundations of mathematics can and do agree as to whether a purported proof is indeed a proof; it is just a matter of checking. But each deep open problem in mathematics poses a challenge to confront potential infinity: can one find, among the infinite possibilities of correct reasoning, a proof?

From page 6: The notion of the actual infinity of all numbers is a product of human imagination; the story is simply made up. The tale of ω even has the structure of the traditional fairy tale: “Once upon a time there was a number called 0. It had a successor, which in turn had a successor, and all the successors had successors happily ever after.” Some mathematicians, the fundamentalists, believe in the literal inerrancy of the tale, while others, the formalists, do not. When mathematicians are doing mathematics, as opposed to talking about mathematics, it makes no difference: the theorems and proofs of the ones are indistinguishable from those of the others.

And from page 7: Hilbert is revered and reviled as the founder of formalism, but his formalism was only a tactic in his struggle against Brouwer to preserve classical mathematics: in his deepest beliefs he was a Platonist (what I have called, as a polemical ploy, a fundamentalist). Witness his saying, “No one shall expel us from the Paradise that Cantor has created.” Hilbert’s mistake, which a radical formalist would not have made, was to pose the problem of proving by finitary means the consistency of arithmetic, rather than to pose the problem of investigating by finitary means whether arithmetic is consistent. Godel’s second incompleteness theorem is that P cannot be proved consistent by means expressible in P, provided that P is consistent. This important proviso is often omitted.

]
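The preschool problem from Nelson's first page can be reproduced in a few lines. The Python sketch below (function names are illustrative) generates two seventeen-digit numbers whose sum involves no carrying and counts how many such ordered pairs exist: 36 choices for the leading pair of digits (both nonzero, sum at most 9) and 55 for each of the other 16 positions, so 36 · 55^16, roughly 2.5 × 10^29 pairs. The generator picks digits one position at a time and is not uniform over all such pairs, which does not matter for the illustration.

```python
import random


def carry_free_pair(digits, rng=random):
    """Two random numbers with the given number of digits whose sum needs no
    carrying: in every position the two digits add up to at most 9."""
    a_digits, b_digits = [], []
    for position in range(digits):
        low = 1 if position == 0 else 0        # leading digits must be nonzero
        a = rng.randint(low, 9 - low)
        b = rng.randint(low, 9 - a)            # keeps a + b <= 9
        a_digits.append(a)
        b_digits.append(b)
    return int("".join(map(str, a_digits))), int("".join(map(str, b_digits)))


def count_carry_free_pairs(digits):
    """Number of ordered pairs of d-digit numbers that can be added without carrying."""
    leading = sum(1 for a in range(1, 10) for b in range(1, 10) if a + b <= 9)
    other = sum(1 for a in range(10) for b in range(10) if a + b <= 9)
    return leading * other ** (digits - 1)


x, y = carry_free_pair(17)
print(x, "+", y, "=", x + y)
print(count_carry_free_pairs(17))
```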

A finitist bunker?

As Gödel has shown, there is no way to avoid the inconsistency danger by extending the axiom system. It might be possible to prove the consistency in a larger system, but then that larger system has the same problem. As in the story told by Hawking about the old lady who insists that the world rests on turtles all the way down, axiom systems built on top of each other look like turtles all the way down.

Image source: part of this page.

What to do then? One way is to restrict the axioms and allow only finite notions. This is the finitist approach. But as the Nelson asteroid, which passed close by, indicates, this is hardly a solution: already within finite mathematics, there is the potential infinite. A more radical approach is “strict finitism” or even ultrafinitism.

A difficulty with ultrafinitism is that there is never a sharp bound on what one should still consider acceptable. A strict finitist in the time of Euclid would set a different bound on what is acceptable than a modern mathematician with access to powerful computers. And then there is also theoretical progress. We might in the future develop a finite language and mathematics to deal with large numbers like

$$\pi^{\pi^{\pi^{\pi^{\pi^{\pi^{\pi}}}}}}$$

and decide whether it is rational or not, even though we have no way to realize this number. Currently, such numbers are not part of what a strict finitist would consider acceptable. We know that the set of primes is infinite, even though we can never look at all of them. In other words, whether an object can be considered in strict finitism depends on the state of the art. My own attitude is that I trust results more if I can compute them explicitly, possibly with a computer. Now, there is also the danger that a computer makes mistakes (remember the Pentium bug) or that a programming language is buggy. Especially if the source code of the language is not available, we cannot know about hidden errors or (much less likely) hoaxes. We would not know, for example, if a programmer had decided not to like the prime 179424673 and to do something different in a computation whenever that prime appears.
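To get a feeling for why a tower of π's cannot be “realized”, here is a small Python sketch (the function name is made up) that counts the decimal digits of the much shorter tower π^(π^(π^π)) with only four π's, working with logarithms instead of the number itself. Even this four-level tower has roughly 6.66 × 10^17 digits, and it is a well-known open problem whether it is an integer. Double-precision rounding makes the count reliable only to within a few thousand digits, which is enough for an order-of-magnitude illustration.

```python
import math


def tower_digits():
    """Approximate number of decimal digits of pi^(pi^(pi^pi)).
    The number itself is far too large to write down, so use
    digits(N) = floor(log10(N)) + 1 with log10(pi^t) = t * log10(pi)."""
    t2 = math.pi ** math.pi      # pi^pi, about 36.46
    t3 = math.pi ** t2           # pi^(pi^pi), about 1.34e18
    return math.floor(t3 * math.log10(math.pi)) + 1


print(f"{tower_digits():.3e}")
```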

[Added April 23: even if all source code is open and “self programmed”, there can be danger. Here is a story from my time in the army (military service is mandatory in Switzerland). The cryptology group there had an independently developed integer arithmetic system written in Pascal. Everything was written “in house” from scratch, and no external code except of course the Pascal compiler was used (actually, some of the folks in the CS part of the group knew everything about operating systems and had helped build systems like Oberon). Together with a colleague, I implemented the Morrison-Brillhart algorithm and the quadratic sieve in Pascal. We were almost finished when suddenly things started to fail. Debugging pointed to a location where the square root of a quadratic residue modulo a prime was computed. More investigation revealed that the square root routine was buggy, which was puzzling as it had worked before. It turned out that the square root routine had been optimized on a fundamental level during an upgrade and that it still contained a subtle bug. Anyway, it illustrates that we have to trust basic computations (and especially libraries). A computer is programmed by humans (or, more and more, also by machines). Having all tools correct is not guaranteed. And even more, as our own brain is a computer too, we also have to worry about more basic things: we have to trust, for example, that we can store information reliably in memory. Back to basic arithmetic: implementing long integer arithmetic seems not that complex, but there are challenges. The routines have to be rock solid, fast and optimized. Even long integer multiplication can be optimized using the Fast Fourier Transform beyond a certain threshold. There are routines like the discrete log procedure or the integer factorization procedure which constantly need to be adapted as the theory evolves.
For the latter, a cocktail of algorithms, depending on the size and nature of the number, had been used (it’s probably still similar today): first start with a basic baby test, then run Pollard-Brent rho, continue with Morrison-Brillhart, quadratic sieve or number field sieve algorithms and finally throw in elliptic curve factorization algorithms. Computer algebra systems like Mathematica are pretty opaque about what they are doing to factor an integer. It’s certainly also a cocktail, but the mix is a trade secret. I myself also use Pari for simple things, as pari/gp is more open.]
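The factorization cocktail described above can be sketched in a few dozen lines of Python. This is a toy under stated assumptions: the stages (trial division by primes below 1000, a Miller-Rabin probable-prime test, then Brent's variant of Pollard's rho) and all function names are illustrative choices, not the mix used by Mathematica or Pari, and the heavier stages (Morrison-Brillhart, the quadratic and number field sieves, elliptic curves) are left out. Since rho needs roughly √p steps to split off a factor p, the sketch is only practical when the second-largest prime factor is modest. In the spirit of the story above, the routine does not trust itself: a factorization is cheap to verify, so it multiplies the factors back before returning.

```python
import math
import random

# Primes below 1000 for the trial-division stage (the cutoff is an arbitrary choice).
SMALL_PRIMES = [p for p in range(2, 1000)
                if all(p % d for d in range(2, int(p ** 0.5) + 1))]


def is_probable_prime(n, rounds=20):
    """Miller-Rabin test with random bases; 'True' is correct with high probability."""
    if n < 2:
        return False
    for p in SMALL_PRIMES[:12]:          # 2, 3, 5, ..., 37
        if n % p == 0:
            return n == p
    d, s = n - 1, 0
    while d % 2 == 0:                    # write n - 1 = d * 2^s with d odd
        d //= 2
        s += 1
    for _ in range(rounds):
        x = pow(random.randrange(2, n - 1), d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = x * x % n
            if x == n - 1:
                break
        else:
            return False                 # this base witnesses that n is composite
    return True


def pollard_brent(n):
    """Brent's variant of Pollard's rho: a nontrivial factor of an odd composite n."""
    while True:
        y, c, m = random.randrange(1, n), random.randrange(1, n), 128
        g = r = q = 1
        while g == 1:
            x = y
            for _ in range(r):
                y = (y * y + c) % n
            k = 0
            while k < r and g == 1:
                ys = y                   # remember where this batch started
                for _ in range(min(m, r - k)):
                    y = (y * y + c) % n
                    q = q * abs(x - y) % n
                g = math.gcd(q, n)
                k += m
            r *= 2
        if g == n:                       # batched gcd overshot: redo the batch step by step
            g = 1
            while g == 1:
                ys = (ys * ys + c) % n
                g = math.gcd(abs(x - ys), n)
        if g != n:                       # otherwise retry with a new random c
            return g


def factor(n):
    """Sorted prime factors of n >= 1: trial division, then Miller-Rabin, then Pollard-Brent."""
    original, factors = n, []
    for p in SMALL_PRIMES:
        while n % p == 0:
            factors.append(p)
            n //= p
    stack = [n] if n > 1 else []
    while stack:
        m = stack.pop()
        if is_probable_prime(m):
            factors.append(m)
        else:
            d = pollard_brent(m)
            stack += [d, m // d]
    factors.sort()
    # A factorization is cheap to check, so do not trust the routine blindly.
    if math.prod(factors) != original:
        raise ArithmeticError("factors do not multiply back to n")
    return factors
```

For example, `factor(600851475143)` returns `[71, 839, 1471, 6857]`. In PARI/GP the corresponding built-in is `factor(n)`.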

Hope

To finish this short post on an optimistic note: I don’t believe that we would have a severe problem even if somebody proved the inconsistency of Peano arithmetic in the future. We have been able in the past to deal with paradoxes and even turn them to our advantage. The earliest one, the Epimenides paradox, was later even shaped into a “gem of mathematics” (Gödel’s theorem). Similarly, an inconsistency could propel mathematics into another realm we do not even dream about now. Imagine a Greek mathematician looking at the mathematics we have today with all its geometric, analytic, algebraic, topological, combinatorial and probabilistic structures. It is much richer and more interesting than the mathematics which the Greeks were pondering. Their worries (like the existence of irrational numbers, the parallel axiom in the axiom system of plane geometry, or Zeno’s paradoxes) now sound rather naive and have been completely resolved, sometimes leading to entirely new worlds. The mathematics of the Greeks is now taught early in school. The research frontiers have moved to much larger and even more interesting constructs. A crisis like the development of an inconsistency might even have a positive effect. In the past, every crisis was beneficial: the irrationality crisis (which led to much richer number systems), the parallel axiom crisis (which led to much richer non-Euclidean geometries), the infinity crisis (which led to modern set-theoretical reformulations of mathematics), the incompleteness crisis (which led to a richer axiomatic landscape), the problem of divergent series (which led to analytic continuation or renormalization theories, which handle the infinities in a completely rigorous way) and the non-solvability of the quintic (which led to Galois theory). Actually, in each of the cases we are aware of, the absence of the crisis would have been the disaster!
We would have been stuck with integers, plane geometry, complicated formulas for the solutions of polynomial equations of degree n, etc. Also the computational limits we have (Turing’s result that one cannot decide in general whether a Turing machine halts, etc.) or the limitations on integrating functions in closed form (which led to differential Galois theory) make mathematics more interesting.

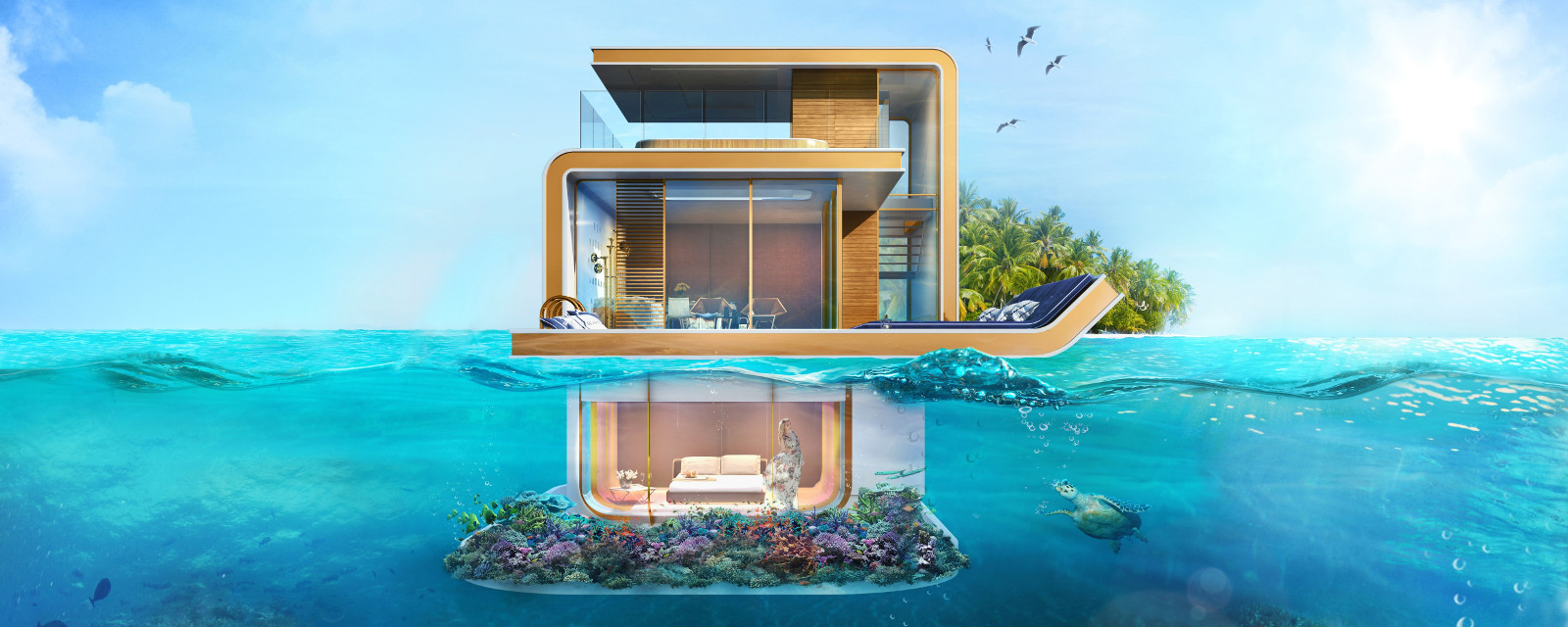

The need for a “bunker” might even lead to new ideas and structures, illustrated by this architectural design of a cute bunker:

Image source: from this picture.

Some people

Here are a few pictures of mathematicians:

Leopold Kronecker (1823-1891) was a forerunner of intuitionism. Having contributed substantially to number theory and algebra, he is particularly famous for his quote “God made the integers, all else is the work of man.”

Image Source.

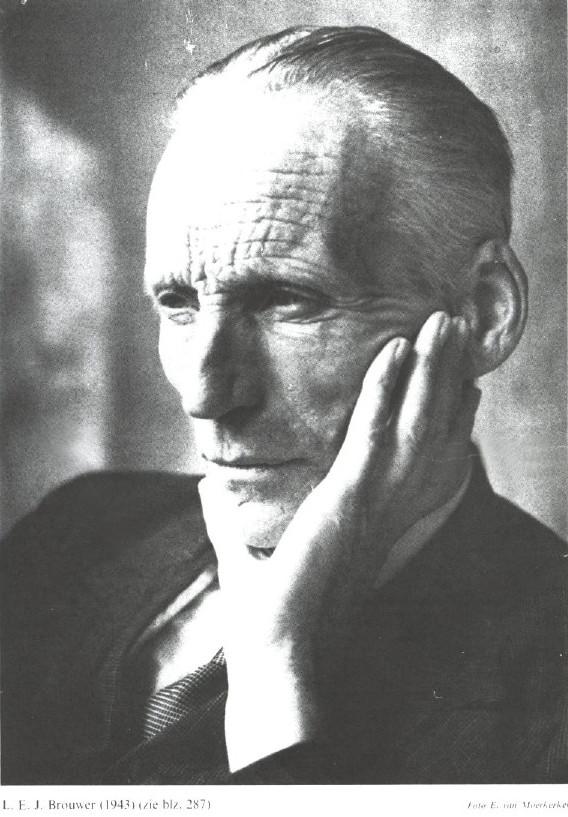

Luitzen Egbertus Jan Brouwer (shown here in 1943) was a Dutch mathematician who lived from 1881 to 1966 (see a bio). A student of Diederik Korteweg, he contributed substantially to topology (Brouwer fixed point theorem, topological invariance of dimension, simplicial approximation theorem) and then founded the mathematical philosophy of intuitionism.

Image source.

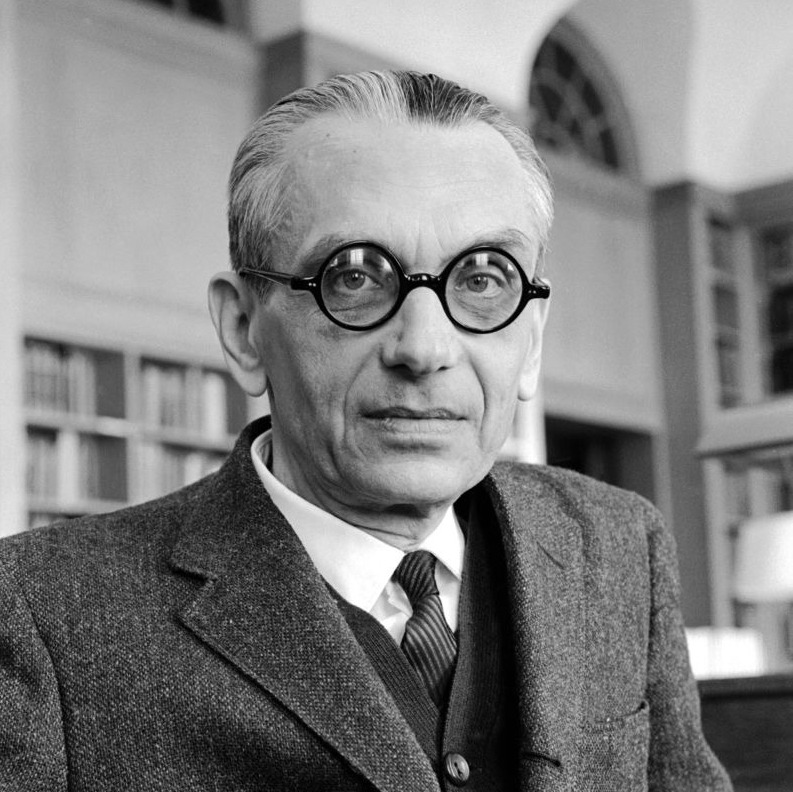

Kurt Gödel (1906-1978) published his incompleteness theorems in 1931.

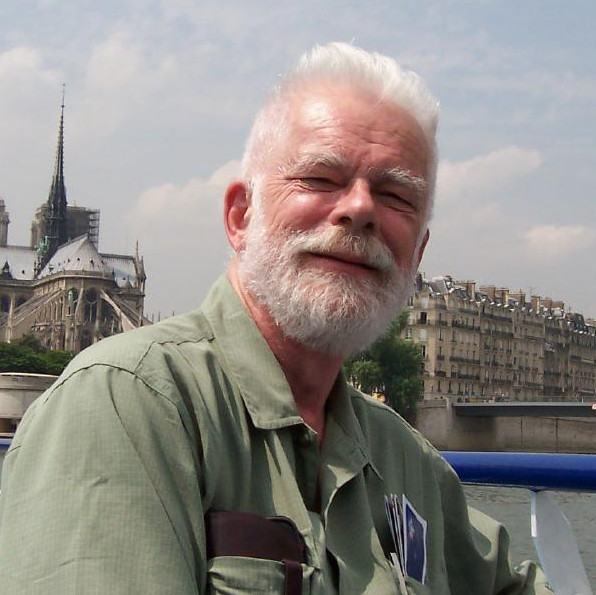

Edward Nelson (1932-2014) worked in both mathematical physics and mathematical logic. In 2011 he announced that he had found an inconsistency in Peano arithmetic, but he later retracted the claim.

Image Source.

Erwin Engeler has published extensively on metamathematical questions as well as on relations between the foundations of mathematics and computer science (which naturally lead to finitism or even strict finitism). A great book is “Metamathematik der Elementarmathematik” (1983; English: Foundations of Mathematics, 1993).

Image source.

Harvey Friedman is a modern logician, a student of Gerald Sacks. Friedman once formulated a grand conjecture saying essentially that finite set theory is enough to prove or refute all finitary mathematical statements. He calls this “practical completeness”. See for example Unprovable theorems in discrete mathematics.