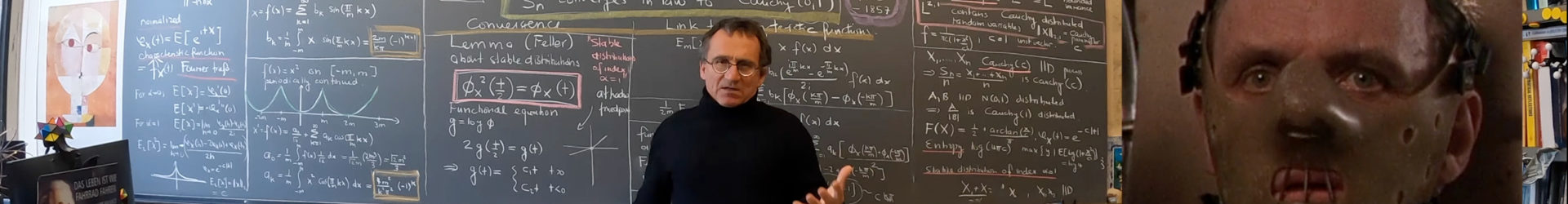

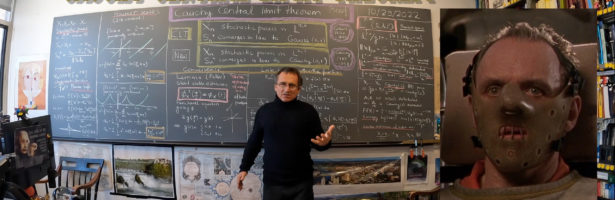

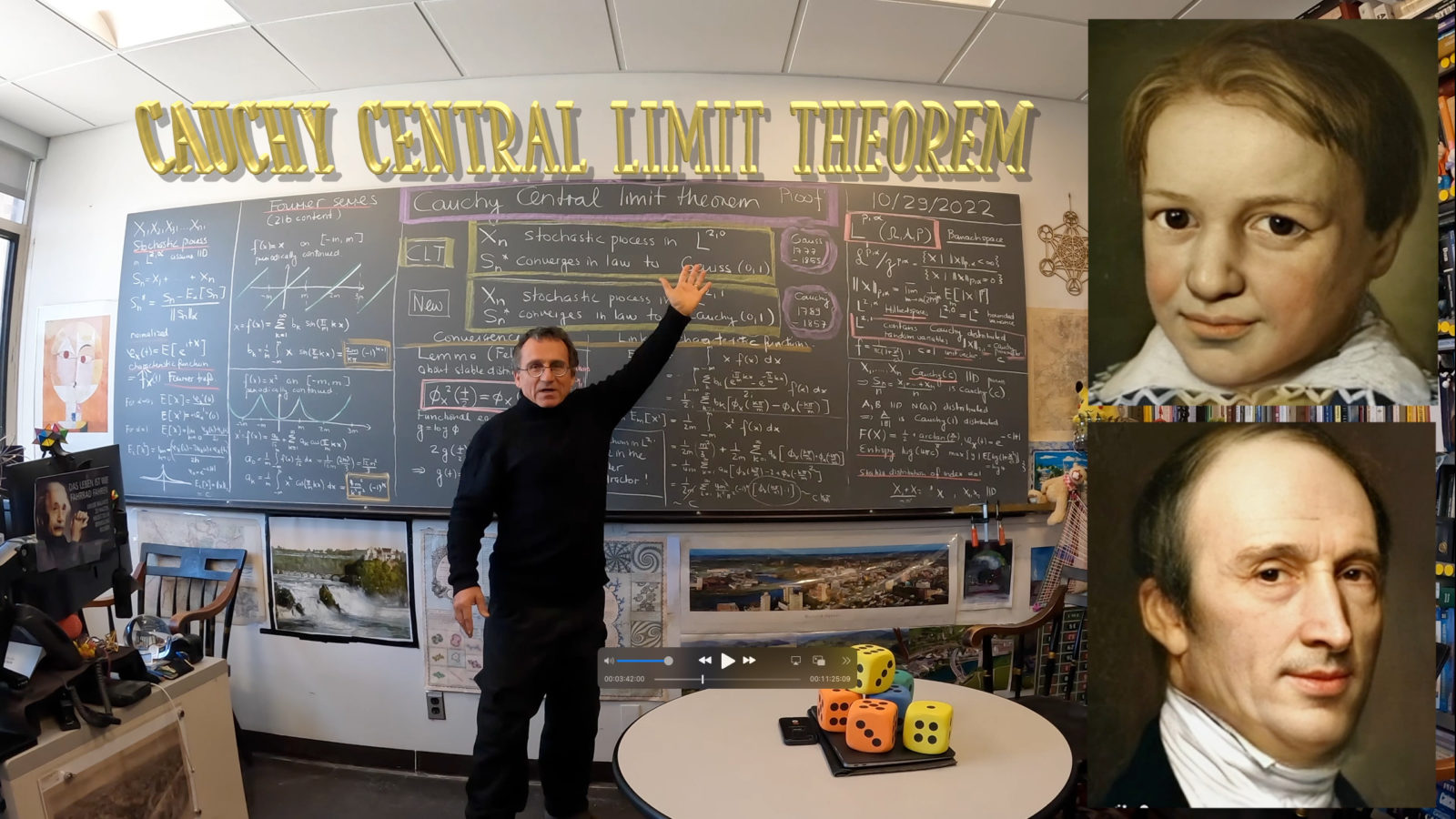

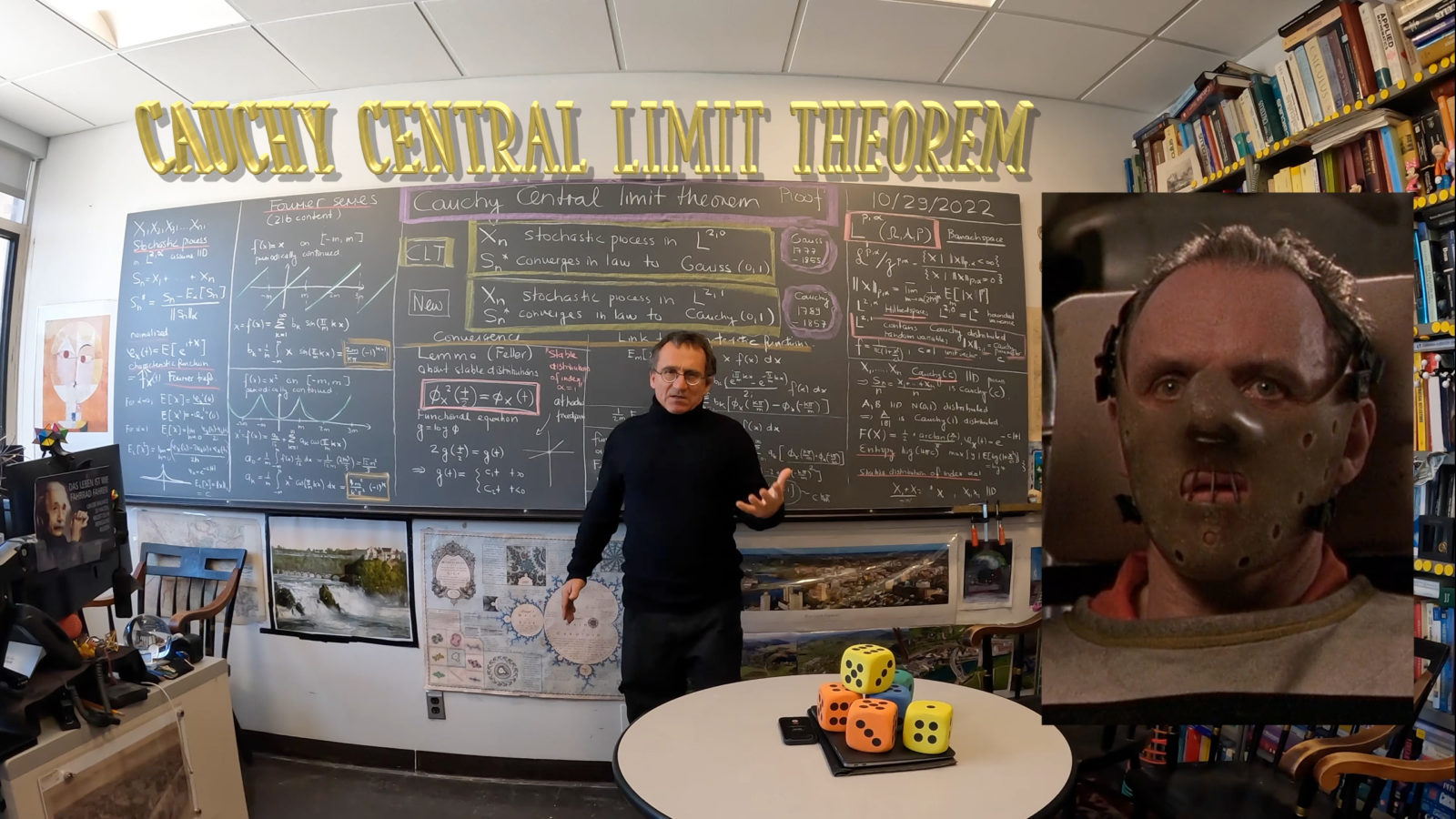

There were two updates on the Cauchy central limit theorem, which states that if the Cauchy mean and risk of a random variable $X$ with PDF $f$ are finite and non-zero, then any IID random process $X_1, X_2, \dots$ with that distribution has normalized sums $S_n/n = (X_1 + \cdots + X_n)/n$
which converge in distribution to the Cauchy distribution. There are results of Paul Lévy describing, in terms of the characteristic function, when one has convergence. This is based on a rather simple fixed point result, which says that the logarithm $g = \log \phi$ of the characteristic function $\phi(t) = E[e^{itX}]$ satisfies the fixed point equation $g(t) = 2 g(t/2)$, so that if $g$ has one-sided derivatives at 0 different from 0 and infinity and is even, then $g(t) = -c|t|$ with a constant $c > 0$ must be the fixed point. Indeed, the renormalization map $T(g)(t) = 2 g(t/2)$
on even functions converges. By a general Zeckendorf argument, the convergence of $S_{2^k}/2^k$ is equivalent to the convergence of $S_n/n$. As for working with distributions, one can find in the book of Kolmogorov-Gnedenko a theorem of Gnedenko which describes, in terms of the CDF $F$, whether we are in the attractor of the Cauchy distribution. What I want to do is to have the risk condition alone decide whether we are in the attractor. We only need to show that non-zero, finite risk implies that $\phi'$
(or $g'$) have one-sided limits at zero. This is not so obvious. While the function $\phi$
is uniformly continuous, the one-sided limits do not necessarily have to exist. There is a beautiful theorem of Polya which assures that $\phi$ comes from a probability distribution. It is sufficient that $\phi$ is symmetric and convex on $(0, \infty)$, with $\phi(0) = 1$ and $\lim_{t \to \infty} \phi(t) = 0$. Under this Polya condition the one-sided derivatives exist at every point! But most distributions do not satisfy the Polya condition. One can construct densities $f$
for which at some points $t_0$, the derivative function $\phi'$
has a devil comb oscillatory singularity, and I believe one can have this even on a dense set of points, but I do not think this can happen at 0. For Cauchy, one only needs to show this to be the case under the finite risk condition. By subtracting and adding a suitable Cauchy distribution function, one now only has to show that if the risk functional for a function $h$ is zero, then its Fourier transform $\hat{h}$ has a derivative at $0$. Classically, if the mean is zero and the variance is finite, then $\phi$
is even twice differentiable and $t = 0$ is a maximum. A Fourier computation similar to the one done in the video should allow us to conclude that in the zero risk case, we still must have both

. I had been thinking about this problem and the videos helped a bit. But it is not finished.
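
The convergence of the renormalization map mentioned above can be checked numerically in a simple case. The following is only a sketch under stated assumptions. It assumes the map has the form $T(g)(t) = 2 g(t/2)$, which is the log-characteristic function of $(X_1 + X_2)/2$ for IID $X_1, X_2$. It uses the illustrative choice $\phi(t) = 1/(1+|t|)$, which satisfies the Polya condition and has $g'(0^+) = -1$. The function names are hypothetical and not taken from the videos.

```python
import math

def g(t):
    """Log of the Polya-type characteristic function phi(t) = 1/(1+|t|).
    This phi is an illustrative assumption, not an example from the post."""
    return -math.log1p(abs(t))

def renormalize(h):
    """Assumed renormalization map T(h)(t) = 2*h(t/2): the log-characteristic
    function of (X1 + X2)/2 when X1, X2 are IID with log-characteristic function h."""
    return lambda t: 2.0 * h(t / 2.0)

def iterate(h, n):
    """Apply the renormalization map n times."""
    for _ in range(n):
        h = renormalize(h)
    return h

def distance_to_cauchy(h, c=1.0, t_max=5.0, steps=101):
    """Largest deviation of h from the Cauchy fixed point -c|t| on a grid in [-t_max, t_max]."""
    ts = [-t_max + 2.0 * t_max * k / (steps - 1) for k in range(steps)]
    return max(abs(h(t) + c * abs(t)) for t in ts)

levels = (0, 5, 10, 20)
errors = [distance_to_cauchy(iterate(g, n)) for n in levels]
for n, err in zip(levels, errors):
    print(n, err)
```

In this example the deviation from $-|t|$ shrinks as the map is iterated, which is what one expects when the one-sided derivative of $g$ at 0 exists and is finite and non-zero. It says nothing about the harder question of whether finite, non-zero risk forces that derivative to exist.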