Arithmetic with networks

The paper “On the arithmetic of graphs” is posted. (An updated PDF). The paper is far from polished, the document already started to become more convoluted as more and more results were coming in. There had been some disappointment early June when realizing that the Zykov multiplication (which I had been proud of discovering in early January) has a dual which is known as the Sabidussi strong graph multiplication. But this connection also was a good thing as it freed time for other explorations. The main result of the paper is the following theorem which shows that the strong product is compatible with cohomology. Define the Poincaré polynomial , where

are the Betti numbers of G. Then

| The Euler characteristic is multiplicative with respect to the strong graph multiplication ☒. More generally, the Kuenneth formula holds for cohomology. The Poincaré polynomial satisfies p(G ☒ H) = p(G) p(H) which complements the trivial p(G + H) = p(G) + p(H). |

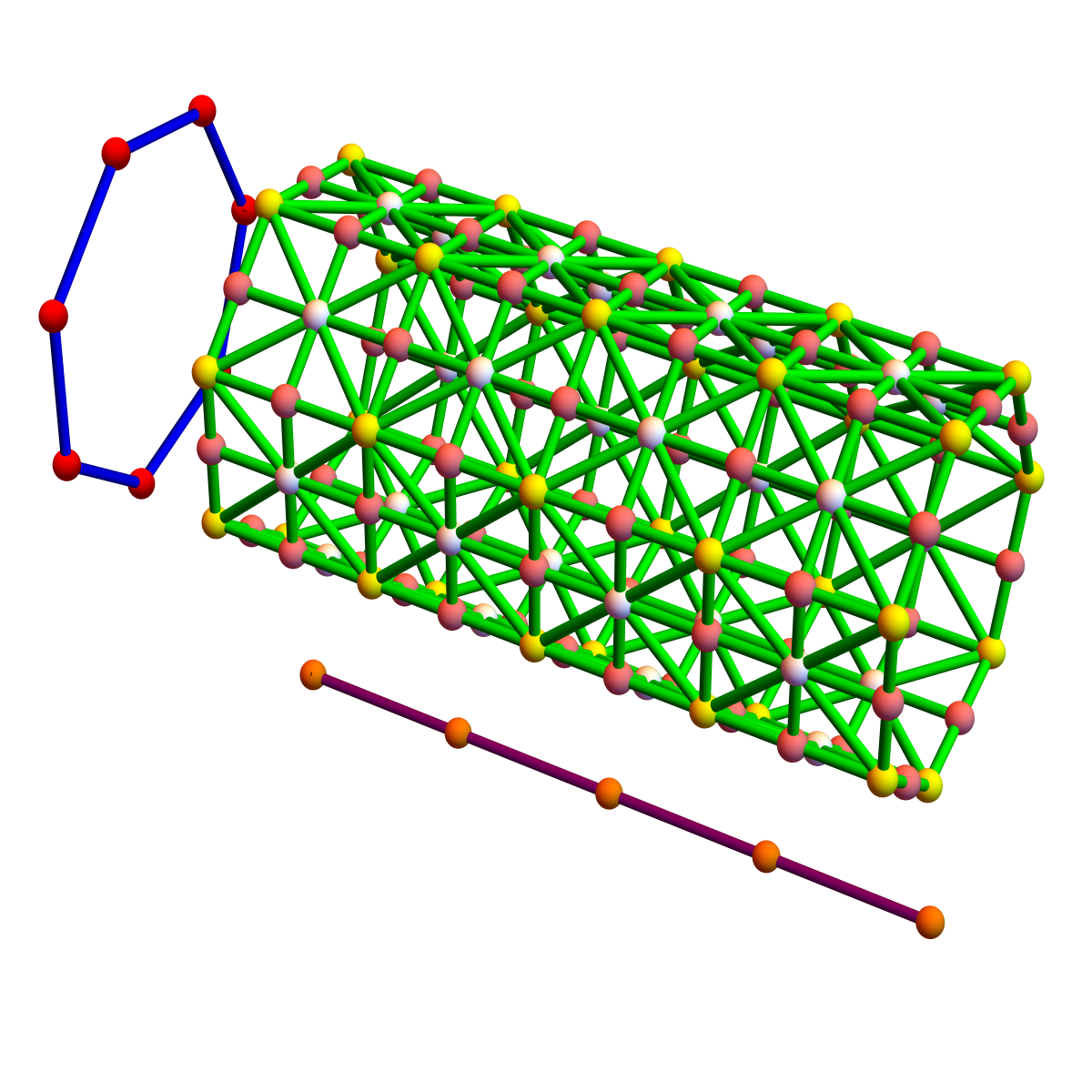

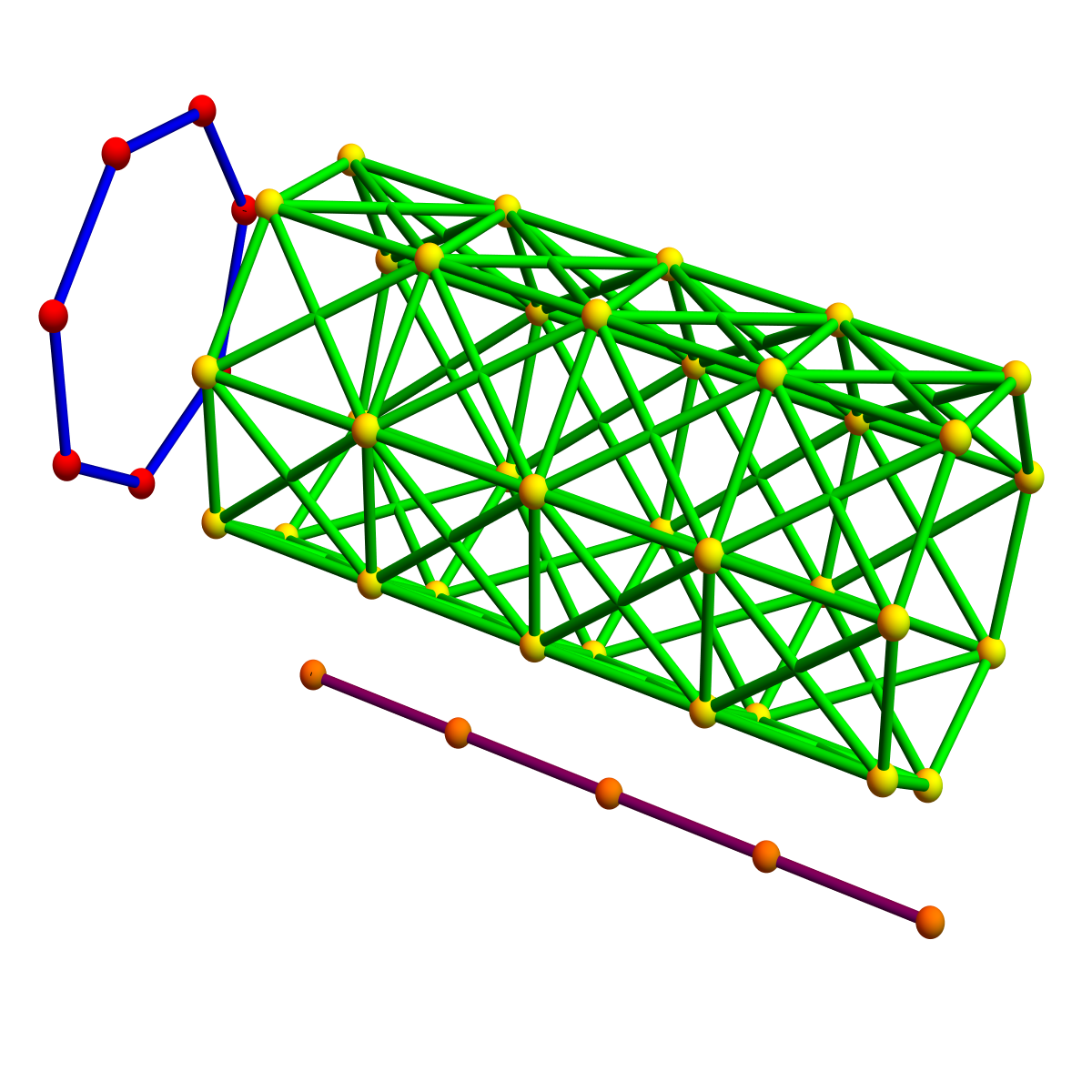

The proof is a deformation argument: we can deform the strong product to a Cartesian Stanley-Reisner type product which satisfies Kuenneth. Modulo homotopy, the strong product is a Cartesian product. If we want to have a product which behaves nicely with respect to dimension, then we have to look at is dual Zykov product. For that Zykov product, the clique number is multiplicative. Here is an illlustration where the result is a cylinder. The two graphs G ☒ H and G x H are homotopic and therefore have the same cohomology and the same Euler characteristic. The following picture illustrates the homotopy between the two products. To the left we see the product which satisfies Kuenneth for graphs (no surprise at all as it is the Standard Kuenneth result when looking at geometric realizations, but the point is that the result is purely finitist and combinatorial even working if one does not accept infinity and especially not Euclidean space). The left product is a Barycentric refinement of a CW complex only as the Cartesian product of two finite abstract simplicial complexes is not a finite abstract simplicial complex any more. Taking the Barycentric refinement distroys associativity as G x K1 is the Barycentric refinement of G and not G. To the right we see the strong product of two graphs. It is associative and leads to the strong ring. The nice thing is that despite the fact that for a topologist it is a bit too fat (the dimension is larger than the Cartesian product dimension), it is homotopic to the Cartesian product to the left and this explains why Kuenneth also holds.

| Cartesian multiplication | Strong multiplication |

|---|---|

|

|

The other products (the weak = cartesian graph product, or tensor = direct product) fail both to have any compatibility. This is illustrated in the paper. In some sense the strong product as a combination of the weak and direct product complements the shortcomings of the two ingredients. It is the magic potion of “weak” and “direct” which makes it “strong” also in a competitive way.

Spectra

Spectral quantities are neither homotopy invariants, nor topological invariants. Compatibility between spectrum and arithmetic is more subtle. Classically, the Cartesian product has a compatibility in that the Cartesian product produces a sum of the eigenvalues. The weak graph product has a compatibility in that the adjacency matrices tensor. We noticed that if we replace the Laplacian with the “connection Laplacian”, then we get compatibility with the simplicial Cartesian product.

| The connection Laplacian of the product complex G x H is the tensor product of the connection Laplacians of the complex G and H. Thefore the spectra multiply. |

It follows that also the connection Laplacians are unimodular for product complexes. The energy theorem extends. As the product is not a simplicial complex any more in general this goes beyond the energy theorem. The arithmetic results allows us to extend the energy theorem without diving into the proof again for discrete CW complexes (which actually would not be a problem as the proof of the unimodularity theorem was built essentially using CW complex constructions. CW complexes are just nice to work with inductively as by definition they glue discs to spheres allowing to extend the theorem cell by cell). Let g=L-1 denote the inverse matrix of L. The entries g(x,y) are Green function entries. They can be interpreted as the potential energy between x and y.

| Also in the ring of CW complexes generated by simplicial complexes, we know that the sum over all g(x,y) is the Euler characteristic. |

To summarize, we have an arithmetic of space which has three angles: the strong (Sabidussi), the join (Zykov) and the simplex (Stanley-Reisner) angle:

- there is compatibility with cohomology in the Sabidussi picture

- there is compatibility with dimension in the Zykov picture

- there is compatibility with spectra in the Stanley-Reisner picture

- there is compatibility with potentials in Stanley-Reisner

- Zykov is dual to Sabidussi

- Sabidussi is homotopic to Stanley-Reisner

- We deal with integral domains but not unique factorization domains.

- Additions have unique prime factorization (a priori not clear for the join operation).

Modulo homotopy and duality we deal with one ring, which rules them all.

[Added June 20: I had missed one important property which is not algebraic but graph theoretic: after consulting more with literature ({Brouwer-Haemers,Schaar-Sonntag-Teichert,Dvetkovic-Doob-Sach, one property known for the strong product was not included yet: the Fredholm adjacency matrices tensor! This is independent of our observation that the Fredholm connection matrices tensor if we take the Cartesian (Stanley-Reisner) product. But the two things can be combined and leads to the important formula:

| (G x H)’ = G’ ☒ H’, |

where G’ is the connection graph of G. This is now a new graph theoretical statement not involving any matrices. But it is nice as is not a graph, only a CW complex (or an element in the general Stanley-Reisner ring. But note that the energy theorem does not extend to the full Stanley-Reisner ring. (The element G=xy+y+yz for example has a complete graph K3 as connection graph where the energy theorem fails as the connection Laplacian is not even invertible.) We see that when going to connection graphs, the Cartesian product corresponds to the strong product. It is amazing how nice the properties of the strong product are: we have compatibility with spectrum and cohomology and the dual ring does magic too as it is a ring with Zykov addition allowing to juggle spheres. It is really a ring which binds them all. End addition]

Computing with Space

The first beginnings of arithmetic built by humans (*) were closely related to physical space. When adding , we an think of 3 as a collection of three pebbles and 5 as a collection of five pebbles. When combining them, we physically produce a larger space, consisting of

pebbles. This pebble addition

can be extended to all finite networks. What is nice about this addition is that it adds up the geometric parts nicely. The number of vertices, the number of edges, the number of triangles etc are all added up. The zero element for this addition is the empty graph.

[(*) added June 30: its actually interesting that we might believe animals count with physical models only (like counting food items for example). But there is reasearch done with crows indicating that they are able to count events, not physical objects. (I learned that from a nice course of Ed Burger Joy of Thinking). Crows can (for very few people at least) keep track how many have entered or left a house. In some sense, this means they can count events, not objects. This is procedural and not physical similarly as 3×4 has the first number 3 behave like a procedure and the second number 4 like an object. But they can also count objects. In some sense they already grasp both aspects of numbers, the procedural (time) component as well as the physical (space) component. Of course only for very small numbers. And crows never evolved to the stage of actually writing down numbers to keep track for example to use physical objects to count people entering or leaving the house. The evolutionary pressure was not string enough for that to happen, as the number of events, where survival would depend on the ability to count more than 2 or 3 people is very low.]

There is an other addition on networks which is dual to that. Take a network of three nodes where all are connected to each other and a network with five nodes. Now take the union and also connect any two nodes from the different networks. This operation is called the join. What is nice about the join addition is that if we add two spheres, we get again a sphere. Add a circle to a circle for example, then you get a three dimensional sphere. More familiar is adding a zero dimensional circle (P2 to a one dimensional circle like C4. This gives the octahedron, which is a two dimensional sphere.The join also adds up nicely the clique numbers. Cliques are maximal complete subgraphs and the number of nodes of such a clique is called the clique number. The clique number is obviously not additive for the union

as the clique number of a disjoint union is the maximum of the clique numbers of each components.

A strange thing happens when we start to multiply pebbles. If we look at , then we add up 3 times four pebbles. If we look at 4*3, then this is something else. We add up 4 times three pebbles. We can arrange the 12 pebbles in a rectangular manner and identify the product as an area which does not change if we turn the rectangle by 90 degrees. Still, it is quite remarkable. In one case we take three times a four pebbles and don’t associate the three with pebbles. The three has an other meaning than 4: it is a number of times, we do an operation, it is not a number of pebbles. Still, we keep this remarkable commutative symmetry, which is not obvious. Actually, we we have learned a hundred years ago on, this commutativity is an illusion! Fundamentally, we don’t have commutativity in basic microscopic structures. In the very small, the arithmetic is not commutative any more. But we don’t have to go down to Planck level to get non-commutativity. It already appars much earlier.

There are operations where the order matters like 34, multiplying four time 3 with itself or then 43, multiplying three times 4 with itself. This appears a bit strange as both addition and multiplication are commutative one could naively think that this would lead to commutativity of the “next level”. It is one of the wonders of arithmetic that two operations like addition and multiplication work well together and that it stops there. Nice algebraic structures with three operations working together are rare. We mean with this that is not the case that addition is operation 0, multiplication an operation 1, leading to an operation 2 etc and higher ring structures with more and more operations.

Somehow this is in the nature of things. We experience such “no go” or “drive to elegance” principles also elsewhere. In Lagrangian-Hamiltonian dynamics for example, the interesting derivatives stop with the second derivative. Yes, one can look at jerk, snap, crackle, pop and Harvard for the third, fourth, fifth, and sixth derivative but they become less and less relevant. It is Newton’s law which tells that the acceleration is a function of the position and velocity. In arithmetic, one can also continue. We can look at exponentiation 34, then repeat exponentiation and define 3^^4 as 3^3^3^3 then then continue with 3^^^^4 etc but also here, both the arithmetic properties are opaque, it is also hardly useful. A very similar phenomenon is seen when looking at data structures: we know that we can organize data as lists, lists of lists etc. Mathematicially these are vectors or matrices (tables of data). Now, one might think that three dimensional arrays of data etc remain important but the theory of relational data bases has shown that one can do very well with “tables” alone. Matrices are very powerful also in mathematics and higher order tensors are less used or then reduced to matrices, similarly as a database is normalized.

Still, it is not excluded that in some future mathematics, somebody can build a theory of a structure (R,+,x,*) with three operations in harmony and which is both useful as well as beautiful. Similarly as general relativity uses tensors which needs tensors more complicated than matrices like the Christophel symbols which are essential for example to describe geodesics

. (In the arithmetic case with three operations, we don’t mean silly cases where for example the scalar multiplication is counted as an other operation like in a matrix algebra, where one has both the matrix multiplication, the matrix addition and the scalar multiplication). Mathematics is full of ugly hacks. But somehow, nature (in the form of physics) picks the most beautiful structures. In the case of matrix algebra, it is a nice ring, which is essential everywhere, from classical mechanics, to electromagnetism, relativity and quantum mechanics.

Extending number systems

The appearance of 0 in our number system appeared surprisingly late. As the Sumerian mathematicians just left an empty place holder, the sign for 0 seems have appeared in India and the South Americas independently (Mayan mathematics) but also in Quipus, not pebble but knot based systems. One having a zero, one gets quickly comfortable with negative numbers, which especially in trading appear as “loans” or in temperature scales as “below zero temperature”. The fractions had it easier. They appeared much earlier. Greeks and Egyptian mathematicians knew them. The real numbers, discovered first famously by the Greeks (leading them into a crysis) was a big deal. For us they are now very natural but it’s still far away afrom the Stick, Pebble, marks or knot mathematics which came before. But the main motivation to introduce real numbers were measurements: the area of the disk, the length of the diagonal of a square are examples of numbers which are not rational.

The field of networks

Of course, once one has a ring of operations, one can build a field and complete it algebraically or topologically or both. The ring aspect of networks seems not have appeared in the literature. It requires first to extend the additive monoids to a group. This is no problem and it was Grothendieck who formalized this with full generality. So, what are negative networks? Its best to see this in the Sabidussi ring, where the addition is the disjoint union. Now, every signed ring is a collection of networks, where some connectivity components have a negative sign. And then there is the rule (equivalence relation) which tells that if we see two isomorphic connectivity components of different sign, then they can be deleted.

This can also be illustrated in the pebble picture: there are positive pebbles and negative pebbles. We can get from one pebble configuration to an other one by removing a positive and negative pebble.

June 23: Here is a picture of some blackboard musings in the field of networks. One can imagine being Hippasus and show how that some rings are “irrational”. (I hope the gods do not punish for that):