One dimension

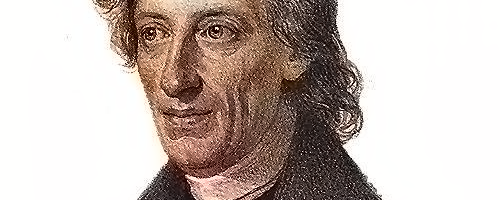

I have always also fun teaching single variable calculus. This year, the course site is here. One of the great pioneers in real analysis was Bernard Bolzano. He was not recognized enough during his life but he was one of the first to realize that theorems about continuous functions are not totally obvious. There are various theorems associated with him: the marvelous intermediate value theorem, the extremal value theorem and the Bolzano-Weierstrass theorem. It looks as if the theorems are about single variable, they are however rather general. Let X be a topological space and a continuous map and

a continuous curve, then

is a real valued map on the interval [a,b]. The topological space itself can be rather arbitrary, it could be a manifold, a function space, a crazy fractal, it does not matter. The fact remains that in the end we are dealing with a continuous function on an interval.

Discrete derivatives

In quantum calculus, there are no limits. In some sense, the modern set-theoretical setup of topology, there are no limits. A function from a topological space to a an other topological space

is continuous if the inverse of any

. But one can also get rid of limits by replacing derivatives with differences. Given a positive Planck constant

, one can look at the derivative

. We can consider this a discrete derivative. The mathematics of discrete derivatives and discrete integrals is surprisingly close to the mathematics when we take the limit h ->0. We just have to deform the algebra of polynomials and define

and

to get the same differentiation rules than the ones we are familiar with. We have then however a calculus which works for arbitrary functions and not only for smooth functions.

Discrete critical points always lead to max or min

Whenever teaching single variable calculus the last 10 years, I mentioned the following discrete variant of the inverse of Fermat’s theorem which is wonderful because it is classically not true as the example shows.

Theorem: If a is a discrete h-critical point, then there exists a point x in [a,a+h] where f(x) is a local maximum or a local minimum.

This is a direct consequence of the Bolzano extremal value theorem because a continuous function takes a maximum and minimum on a compact interval [a,a+h] and that either f is constant or then has either a maximum or minimum in the interior. If there exists a point in [a,a+h] where the value is larger than f(a)=f(a+h), then there is a local maximum in the interior (a,a+h). If there exists a point where the value is smaller than f(a)=f(a+h), then there is a local minimum. If there is no value where f(x) is larger or smaller than f(a)=f(a+h), then f(x) is constant on the interval and every point in the interior is a local maximum and local minimum. The Bolzano extremal value theorem is actually not so simple, as it is not constructive. So, it is better to look for a proof of the above theorem which is constructive. That is indeed possible, and related to an other theorem of Bolzano, the intermediate value theorem. The above theorems also very closely related to the extremal value theorem.

A constructive proof

The existence of maxima or minima is actually constructive. This means that there is an algorithm which produces the maximum or minimum: we start with the interval and

. Now define

. Look at the function

. This function has the property that

. By the Bolzano intermediate value theorem (this is the reason why I mention the above theorem usually in the context of this beautiful theorem) [PDF], there is a point

, where

. This means we have now an interval

which has the same property as before that its discrete derivative is zero at

but with half the Planck constant. We can continue like that and get a nested sequence of intervals

of size

on which the

derivative is zero. The intersection of all these intervals is now either a maximum or minimum. Pretty cool because this is contructive, like the intermediate value theorem (which can be proven by a divide and conquer approach leading to a solution very fast). Note that we have for general continuous functions a theorem telling that the vanishing of a derivative assures the existence of a maximum or minimum. This is remarkable because it is wrong in the classical case even for real-analytic functions like

.

Other places with more regularity

When working in combinatorics, I have seen many instances, where things get more regular. An example is that connection Laplacians of graphs are always invertible. An other example is that if we have a discrete d-manifold, which is a graph for which every unit sphere is a (d-1)-sphere and a d-sphere is a discrete d-manifold for which removing one vertex renders the graph contractible, then given a function f on the vertex set which is a coloring (adjacent points have different values), then every level set f=c is either empty or a discrete (d-1)-manifold. See this article. (Also this article is so simple (Mathematicians would curse it with the word “trivial”) that it is utterly unpublishable. Still, it is a small brick in a bigger building which is extremely exciting. ) But it is really remarkable, if you think what crazy things can happen in the continuum. Look at a level surface of a function f even in three dimensional Euclidean space. This can be extremely complicated. It is a variety or worse can have terrible singularities even in the smooth category as catastrophe theory has shown. In the discrete, there is only regularity. Such epiphanies make you a believer in the discrete. The reason is not some sort of Democritian philosophy (I have a hunch that things are discrete) but the emergence of nice mathematical theorems (even if they are extremely simple). Above all mathematicians, Bolzano would have liked it.